I’d like to start this blog by discussing my first Kaggle data science competition – specifically, the “GigaOM WordPress Challenge”. This was a competition to design a recommendation engine for WordPress blog users; i.e. predict which blog posts a WordPress user would ‘like’ based on prior user activity and blog content. This post will focus on how my engine used the WordPress social graph to find candidate blogs that were not in the user’s direct ‘like history’ but were central in their ‘like network.’

My Approach

My general approach – consistent with my overkill analytics philosophy – was to abandon any notions of elegance and instead blindly throw multiple tactics at the problem. In practical terms, this means I hastily wrote ugly Python scripts to create data features, and I used oversized RAM and CPU from an Amazon EC2 spot instance to avoid any memory or performance issues from inefficient code. I then tossed all of the resulting features into a glm and a random forest, averaged the results, and hoped for the best. It wasn’t subtle, but it was effective. (Full code can be found here if interested.)

The WordPress Social Graph

From my brief review of other winning entries, I believe one unique quality of my submission was its limited use of the WordPress social graph. (Fair warning: I may abuse the term ‘social graph,’ as it is not something I have worked with previously.) Specifically, a user ‘liking’ a blog post creates a link (or edge) between user nodes and blog nodes, and these links construct a graph connecting users to blogs outside their current reading list:

Defining user-blog relationships by this ‘like graph’ opens up a host of available tools and metrics used for social networking and other graph-related problems.

Node Distance, a.k.a. Two Degrees of Separation

The simplest of these graph metrics is the concept of node distance within graphs. In this case, node distance is the smallest number of likes required to traverse between a particular user node and a particular blog node. In the diagram above, for example, User A and Blog 4 have a node distance of 3, while User C and Blog 5 have a distance of 5.

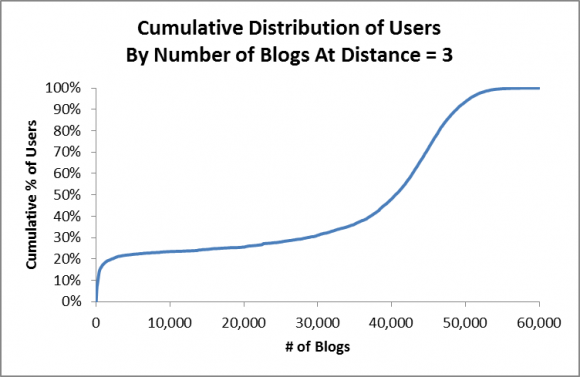

The chart below breaks down likes from the last week of the final competition training data (week 5) by the node distance between the user and the liked blog within their prior ‘like graph’ (training data weeks 1-4):

As you can see, nearly 50% of all new likes are from blogs one ‘edge’ from the user – i.e., blogs the user had already liked in the prior four weeks. These ‘like history’ blogs are a small, easily manageable population for a recommendation engine, and there are many relevant features that can be extracted based on the user’s history with the blog. Therefore, the like history set was the primary focus of most contest submissions (including mine).

However, expanding the search for candidates one more level – to a distance of 3 edges/likes traversed – encompasses 90% of all new likes. A ‘distance 3’ blog wold be a blog that is not in the subject’s immediate like history but that is in the history of another user who had liked at least one blog in common with the subject. This is admittedly a large space (see below), but I think it significant that >90% of a user’s liked blogs in a given week can be found by traversing through just one common reader in the WordPress graph. Finding the key common readers and common blogs, therefore, is a promising avenue for finding recommendation candidates that are new to the subject user.

Node Centrality, a.k.a. Finding The Common Thread

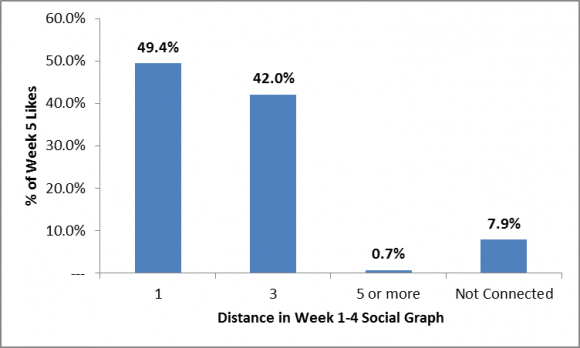

As referenced above, the main problem with using the distance 3 blogs as recommendation candidates is that the search space is far too large – most users have tens of thousands of blogs in their distance 3 sets:

As seen from the above chart, while a significant portion of users (~20%) have a manageable distance 3 blog set (1,000 to 2,000 blogs), the vast majority have tens of thousands of blogs within that distance. (Some post-competition inspection shows that many of these larger networks are caused by a few ‘hyper-active’ users in the distance 3 paths. Eliminating these outliers could be a reasonable way to create a more compact distance 3 search set.)

As seen from the above chart, while a significant portion of users (~20%) have a manageable distance 3 blog set (1,000 to 2,000 blogs), the vast majority have tens of thousands of blogs within that distance. (Some post-competition inspection shows that many of these larger networks are caused by a few ‘hyper-active’ users in the distance 3 paths. Eliminating these outliers could be a reasonable way to create a more compact distance 3 search set.)

One could just ignore the volume issues and run thousands of distance 3 blog candidates per user through the recommendation engine. However, calculating the features and training the models for this many candidate blogs would be computationally intractable (even given my inclination for overkill). To get a manageable search space, one needs to find a basic, easily calculable feature that identifies the most probable liked blogs in the set.

The metric I used was one designed to represent node centrality, a measure of how important a node is within a social graph. There are many sophisticated, theoretically sound ways to measure node centrality, but implementing them would have required minutes of exhaustive wikipedia reading. Instead, I applied a highly simplified calculation designed to measure a blog node’s three-step centrality within a specific user’s social graph:

- Step 1(a): Calculate all three-step paths from the subject user in the graph (counting multiple likes between a user and blog and multiple possible paths);

- Step 1(b): Aggregate the paths by the end-point blog; and

- Step 1(c): Divide the aggregated paths by the total paths in step 1(a).

- Step 2: ???

- Step 3: Profit.

The metric is equivalent to the probability of reaching a blog in three steps from the subject user, assuming that at each outbound like/edge has an equal probability of being followed. It is akin to Google’s original PageRank, except only the starting user node receives an initial ‘score’ and only three steps are allowed when traversing the graph.

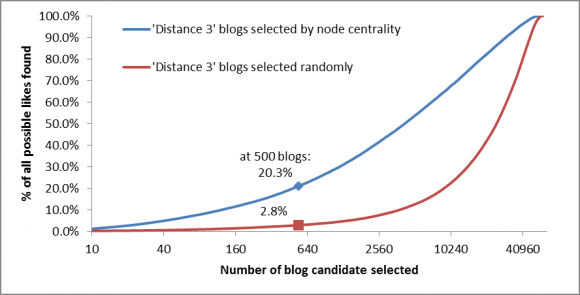

I don’t know if this is correct or theoretically sound, but it worked reasonably well for me – substantially lifting the number of likes found when selecting candidates from the distance 3 graph:

As shown above, if you examine the first 500 distance 3 blogs by this node centrality metric, you can find over 20% of all the likes in the distance 3 blog set. If you selected 500 candidates by random sample, however, only 3% of the likes from this population would be found. While I am certain this metric could be improved greatly by using more sophisticated centrality calculations, the algorithm above serves as a very useful first cut.

Some Very Simple Code

I’d feel remiss not putting any code in this post. Unfortunately, there was a lot of bulky data handling code I used in this competition to get to the point where I could run the analysis above, so posting the code that produced this data would require a lot of extra files. I’d happily send it all to anyone interested, of course, just e-mail me.

However, in the interest of providing something, below is a quick node distance algorithm in Python that I used after the fact to calculate node distances in the like graph. This is just a basic breadth-first search implemented in Python, with the input graph represented as a dictionary with node names as keys and sets of connected node names as values:

def distance(graph, start, end): # return codes for cases where either the start point # or end point are not in the graph at all if start not in graph: return -2 if end not in graph: return -3 # set up a marked dictionary to identify nodes already searched marked = dict((k, False) for k in graph) marked[start] = True # set a FIFO queue (just a python list) of (node, distance) tuples

queue = [(start, 0)] # as long as the queue is full... while len(queue): node = queue.pop(0) # if the next candidate is a match, return the candidate's distance if node[0] == end: return node[1] # otherwise, add all the nodes connected to the candidate if not already searched # mark them as searched (added to queue) and associate them with candidate distance + 1 else: nextnodes = [nn for nn in graph.get(node[0], set()) if marked[nn] == False] queue.extend((nn, node[1]+1) for nn in nextnodes) marked.update(dict((nn, True) for nn in nextnodes)) # if you fall through, return a code to show no connection return -1

Conclusion

In the end, this node centrality calculation served as a feature in my recommendation engine’s ranking and – more importantly – as a method of identifying the candidates to be fed into the engine. I have not done the work to see how much this feature and selection method added to my score, but I know as a feature it added 2%-3% to my validation score, a larger jump than many other features. Moreover, my brief review of the other winner’s code leads me to think this may have been a unique aspect of my entry – many of the other features I used were closely correlated elements in the other’s code.

More crucially, for actual implementation by Automattic the ‘like graph’ is a more practical avenue for a recommendation engine, and is probably what the company uses to recommend new posts. Most of the work we did in the competition differentiated between posts from blogs the user was already reading – useful, but not a huge value-add to the WordPress user. Finding unseen blog posts in which a user may have interest would be a more relevant and valuable tool, and finding new blogs for the user with high centrality in their social graph is a reasonable way to find them. From my work on the competition, I believe these methods would be more promising avenues than NLP and topic-oriented methods.

The above posts covers all of my thinking in applying network concepts to the WordPress challenge problem, but I am certain I only scratched the surface. There are a host of other metrics that make sense to apply (such as eccentricity, closeness and betweenness). If you work for WordPress/Automattic (and that is the only conceivable reason you made it this far), I’d be happy to discuss additional ideas, either on this blog or in person.

Thanks! Learned a lot! I really want to know how do you make the final ranking. Do you treat this recommendation as a classification or regression problem, as you mentioned rf in your blog. If it was, how do you choose negative samples in your train model?

Thank you for being my first reader!

I treated the recommendation as a yes/no classification problem. I selected user-post combinations from a response period in the training set (i.e., fifth week posts) based on the criteria from the stimulus period (posts and ‘likes’ in weeks 1-4). The criteria for a given user were:

A different logistic regression model was applied to posts from each of these three criteria. A single random forest model was applied to the entire pool. I took a simple average of the result, and ranked accordingly. (Based on the evaluation criteria for the competition, only ranking accuracy mattered to the final score.)

I’m confident most of my competition score came from feature selection vs. modeling method. The above yielded a total of ~10 million samples, so testing numerous different methods (or even doing a step-wise selection on the glm’s) would require subsampling the pool. While gains could be had from this analysis, I think the gains from additional feature exploration would be far greater.

Thanks again for your comment!

Thank your for your post and answers but could you explain a little bit more about LGM you’ve used? What were the parameters of each post?

I found really great lecture about regression (http://see.stanford.edu/materials/aimlcs229/cs229-notes1.pdf) and now trying to figure out usage on your example.

Thanks!

Very interesting stuff. I didn’t put a serious effort into this competition, but I did look at the data and this is a nice writeup that addresses a lot of the issues I raised to myself when I thinking about it.

You say that you think most of your score probably came from feature selection. With such a long feedback loop, it seems like there was limited opportunity to perfect features. In a competitive setting like this, do you thing at some point there was an element of luck in happening to select the best features, or do you think you had a systematic advantage in that respect over other competitors?

Great first post! I look forward to reading more of your stuff.

Thanks for your reply, Jeff.

The feedback loop on this competition was long, and some features (particularly language processing features) required significant calculation time. I would have liked to try about five times as many features (or versions of my features) as I did. I’d like to think that my amazing intuition about user behavior guided me to the best features, but I’m guessing luck entered it at least a little. (In fact, my last candidate feature, added on the last day, took me from 3rd to 1st on the public leaderboard. If I had by chance selected a different candidate to add, it would have significantly impacted my placement.)

Thanks for reading. I’ll try to add more content soon.

Thanks to Kaggle and people like you, power of “overkill analytics” where you care about prediction power but not about explanation and ‘intuition’ of variables, is finally being realized. I’m happy!

Thanks! That’s definitely the core of what I mean by ‘overkill analytics’ – results are the key, and should trump any notions of theory, elegance, or knowing what the hell you are doing.

I would not dismiss ‘intuition of variables’ necessarily, though; understanding which data features should have a real-world impact on a problem is a massive boost to any modeling effort. What I think you are getting at, and I agree, is that we traditionally overvalue final results that match our intuition, or ‘make sense’, and assume these will hold when predicting from new data. Intuition is a very weak weapon to use against overfitting.

Hi Carter,

Great writeup.

One of the things you touch on that I’ve been thinking about a lot since the competition ended is the bias in the competition towards those blogs that a user had already been reading. In order to like something you have to have read it.

As you mention, it is possibly more useful to users to recommend content they are not already following. But filtering content they are already subscribed to seems like it could help cut down on noise for them as well.

I also really want to know how that 7.9% of likes that had “no connection” got generated.

It sounds like you generated that from the 6 week data. Did you compare that data to the aggregate 6 month like data to see if any of that 7.9% had liked the blog at some time further in the past? I think the user to blog distribution should indicate that.

-Greg

Greg:

Thanks for reading.

I agree the competition’s aim – finding out what a user did like – may not be fully translatable to a recommendation engine’s aim – finding out what a user might like. Still, there’s a lot of value in the information from the competition’s algorithms for a recommendation engine. Particularly, how likely a user is to like a content on a ‘known’ blog is a great proxy for the connection between the user and that content. These values could be used as weights or guides for further content searches, either to bias NLP queries towards ‘high connection’ content or select ‘edges of interest’ in the ‘like graph’. A weighted centrality measure, using a competition-calculated probability of user liking a known blog as the weight, may significantly outperform the crude centrality measurement discussed above in finding new content.

As for filtering prior content, I’d suggest a ‘point system’ for training a recommendation engine. Recommending a post from an ‘unseen’ blog gets a high positive weight if correct, minimal cost if wrong. If the user has extensive history with the blog, the recommendation engine gets less credit, and/or a greater cost if wrong. The parameters would need to be tweaked, but this should yield engines biased towards promoting new content and extending user connections.

Finally, while I used historical links in the competition, I did not use it in the graph calculations or in the data above. I think a very large part of the 7.9% above would show up at distance 1 or 3 with historical data included. On the other hands, some of the distance 3 connections are trivial in nature – a few users liked almost everything and served to link other users to quite unrelated blogs. I’d say about 20% to 40% of the likes in distance 3 (or 10% to 20% of total likes) fall into this category (though I didn’t run the numbers).

Long comment, but it is your data so I want to make sure I transfer what I learned. Feel free to follow-up with me here or offline with any other questions.

Thanks!

I really appreciate all the thought you’ve put into this, its a very useful analysis that we hadn’t done too much of.

We’re pretty sure that the users with thousands of likes are spam (splikes), I considered removing them for the competition, but wasn’t sure what cutoff to use.

I’ll follow up more when I can get more time to dig through everyone’s code.

[…] only was this Carter’s first win, it was also his first contest. You can read the detailed explanation of his victory on his blog, but the gist is that he didn’t get too involved with complex social graphing to […]

[…] only was this Carter’s first win, it was also his first contest. You can read the detailed explanation of his victory on his blog, but the gist is that he didn’t get too involved with complex social graphing to […]

Hi,

very nice…

Few things I would like Carters view on:

-Overkill – if I told my phd friend that science should use more intuition rather than reducing everything to such atomic fine grained ‘things’ do you think he would be still be that inclined to by me a beer after work on a friday? enter systems thinking…

-Would you class network theory as a branch of systems theory?

-Did you notice any hubs or connectors when looking at the data in terms of the connectors – did you actually run any ‘topology’ models?

-Did you have a steer towards randomness or weak/strong ties?

-Are you able to look at the contents of the blog and maybe tie in some external happenings in the world relevant to the time window of the blog – e.g. financial crash etc.

Does the comp have anything to locks down the findings in that you could submit them to a relevant educational/science institute that is looking into network theory?

thanks

Dean

Dean:

Sorry for the late reply, just returning from vacation.

I hope you will still get that beer from your phd friend. I emphasize intuition because I think ‘good enough’ heuristics usually attain the lion’s share of actual benefit in predictive modeling problems. We still need the precise explanations from your friend to inform how we develop those heuristics. In other words, I like to take shortcuts – but I need the real scientists to show me where it is I am taking a shortcut to.

I doubt I’m qualified to opine on the taxonomy of network theory, but I would put it under systems theory. However, most of the work done in problems like uses relatively static views of the network, whereas what little I know about systems theory is focused on more dynamic processes.

The biggest hubs and connectors in the data were false – ‘spam bot’ users who liked thousands of posts in order to sell Viagra. I did not filter them from the node distance and centrality calculations due to time, but I think doing so would have added significantly to the effectiveness of these metrics.

Only a short time frame was open in the contest, so I don’t know if any world events of significance would have influenced activity. Would be fascinating to see with a longer time frame.

Finally, I’m using this blog to try to get out information about what I used in the contest. My day job and night job (2 young sons) makes any more elaborate publishing unfeasible. That being said, I may look into presenting a more unified view of the findings at relevant conferences, if anyone’s interested.

Great Content…

A powerful share, I just given this onto a colleague who was doing somewhat evaluation on this. And he in actual fact purchased me breakfast as a result of I located it for him.. smile. So let me reword that: Thnx for the deal with! Nonetheless yeah Th…

I am confused, how did you find the first ‘like-graph’, with Masimum Likelihood ? Is it given in the competition’s documents? Why does User B have 3 edges unlike the others? Thanks.